Sunday Poster Session

Category: Practice Management

P1493 - Assessing ChatGPT’s Accuracy and Reliability in Providing Dietary Counseling for Common Gastrointestinal Conditions

Sunday, October 27, 2024

3:30 PM - 7:00 PM ET

Location: Exhibit Hall E


Rabih Ghazi, MD

Cooper University Hospital

Philadelphia, PA

Presenting Author(s)

Rabih Ghazi, MD¹, Thomas Judge, MD², Apeksha Shah, MD³, Christina Tofani, MD, FACG⁴, Rachel Frank, MD⁵, Adib Chaaya, MD⁶, Krysta Contino, MD⁷

¹Cooper University Hospital, Philadelphia, PA; ²Cooper Medical School of Rowan University, Mt. Laurel, NJ; ³Digestive Health Institute at Cooper University Hospital, Mt. Laurel, NJ; ⁴Digestive Health Institute at Cooper University Hospital, Camden, NJ; ⁵Cooper University Hospital, Mt. Laurel, NJ; ⁶Cooper Health Gastroenterology, Camden, NJ; ⁷Digestive Health Institute at Cooper University Hospital, Mount Laurel, NJ

Introduction: Artificial intelligence (AI) is transforming many fields, including medicine. Chat Generative Pre-Trained Transformer (ChatGPT) is a readily accessible AI tool for patients seeking health advice. However, concerns persist about the accuracy and reliability of its information, which could compromise patient safety. Effective dietary counseling is crucial for managing gastrointestinal (GI) conditions such as gastroparesis, celiac disease, and irritable bowel syndrome. This study therefore assesses the accuracy, completeness, comprehensibility, and reliability of ChatGPT-4's responses to dietary questions about these GI conditions.

Methods: A new ChatGPT-4 account was created to gather responses to dietary inquiries about gastroparesis, celiac disease, and irritable bowel syndrome, and the memory feature was disabled to ensure data integrity. On May 4, 2024, two dietary questions per condition (Table 1) were asked sequentially, using a separate chat session for each GI condition. To assess reliability, the same questions were asked again in new chat sessions 5 days later, after the initial session data had been cleared. Five gastroenterology physicians independently rated each response for accuracy, completeness, and comprehensibility on a 5-point Likert scale (Table 1). Outcomes were compared using Student’s t-test or ANOVA.
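The Methods name Student's t-test and ANOVA but do not state which comparisons used which test, whether equal variances were assumed, or what software was used. As a rough sketch only, the two test statistics could be computed with Python's standard library as below. The function names and the rating lists are hypothetical placeholders, not the study's data or code, and p-values are deliberately not computed; in practice they would typically come from a statistics package such as `scipy.stats.ttest_ind` and `scipy.stats.f_oneway`.

```python
from statistics import mean, stdev, variance


def summarize(ratings):
    """Return (mean, sample SD) of Likert ratings, i.e. the 'mean ± SD' form."""
    return mean(ratings), stdev(ratings)


def student_t(a, b):
    """Student's two-sample t statistic with pooled variance (equal variances assumed).

    Returns (t, degrees_of_freedom); the p-value is not computed here.
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    standard_error = (pooled_var * (1 / n1 + 1 / n2)) ** 0.5
    return (mean(a) - mean(b)) / standard_error, df


def one_way_anova(*groups):
    """One-way ANOVA F statistic across two or more groups.

    Returns (F, df_between, df_within); the p-value is not computed here.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual ratings around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical ratings for illustration only (NOT the study's data).
initial = [4, 5, 4, 4, 5, 3]
repeat = [5, 5, 4, 5, 5, 4]

m, sd = summarize(initial)
print(f"initial: {m:.2f} ± {sd:.2f}")
t, df = student_t(initial, repeat)
f_stat, df_b, df_w = one_way_anova(initial, repeat)
print(f"t({df}) = {t:.3f}; F({df_b}, {df_w}) = {f_stat:.3f}")
# prints: t(10) = -1.342; F(1, 10) = 1.800
```

With exactly two groups, the one-way ANOVA F statistic equals the square of the pooled-variance t statistic, which gives a quick consistency check on both functions.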

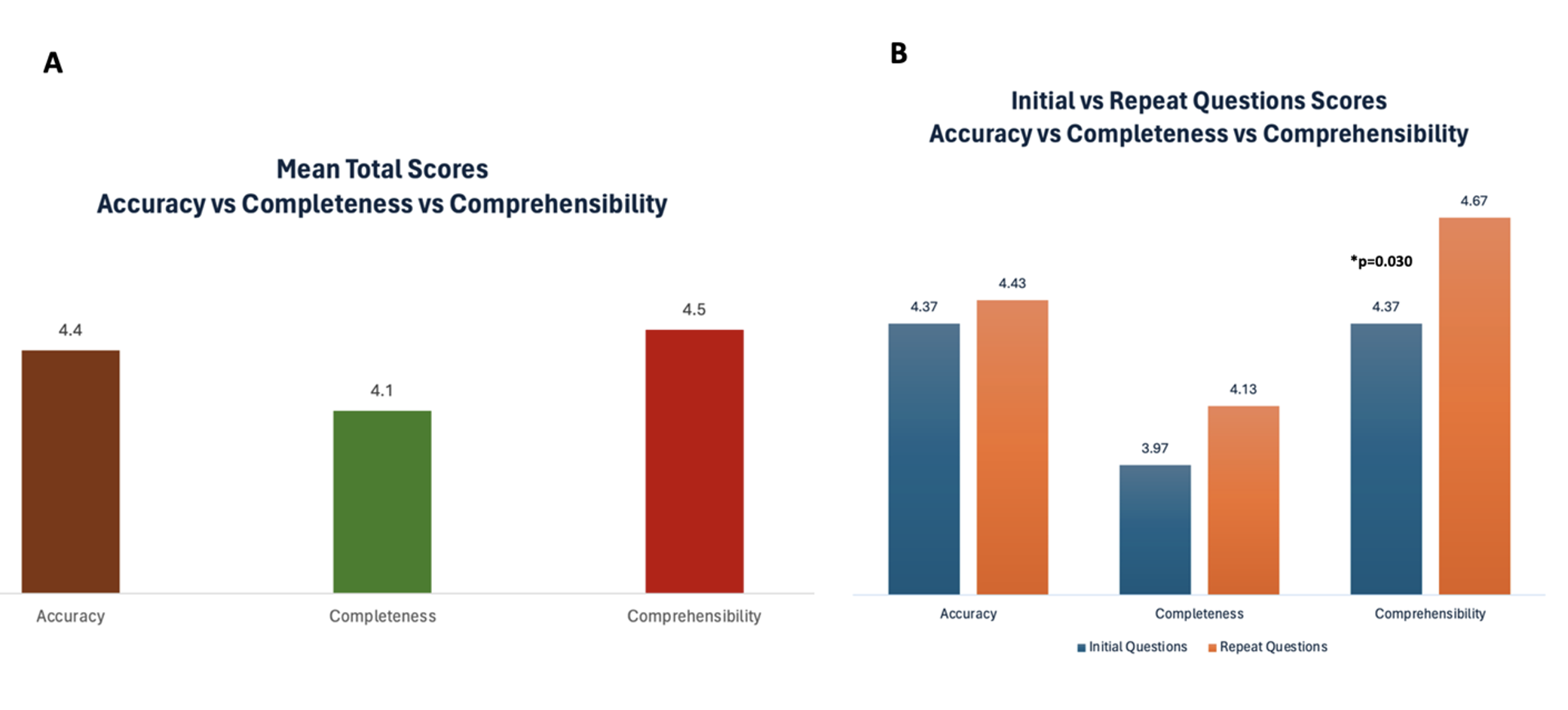

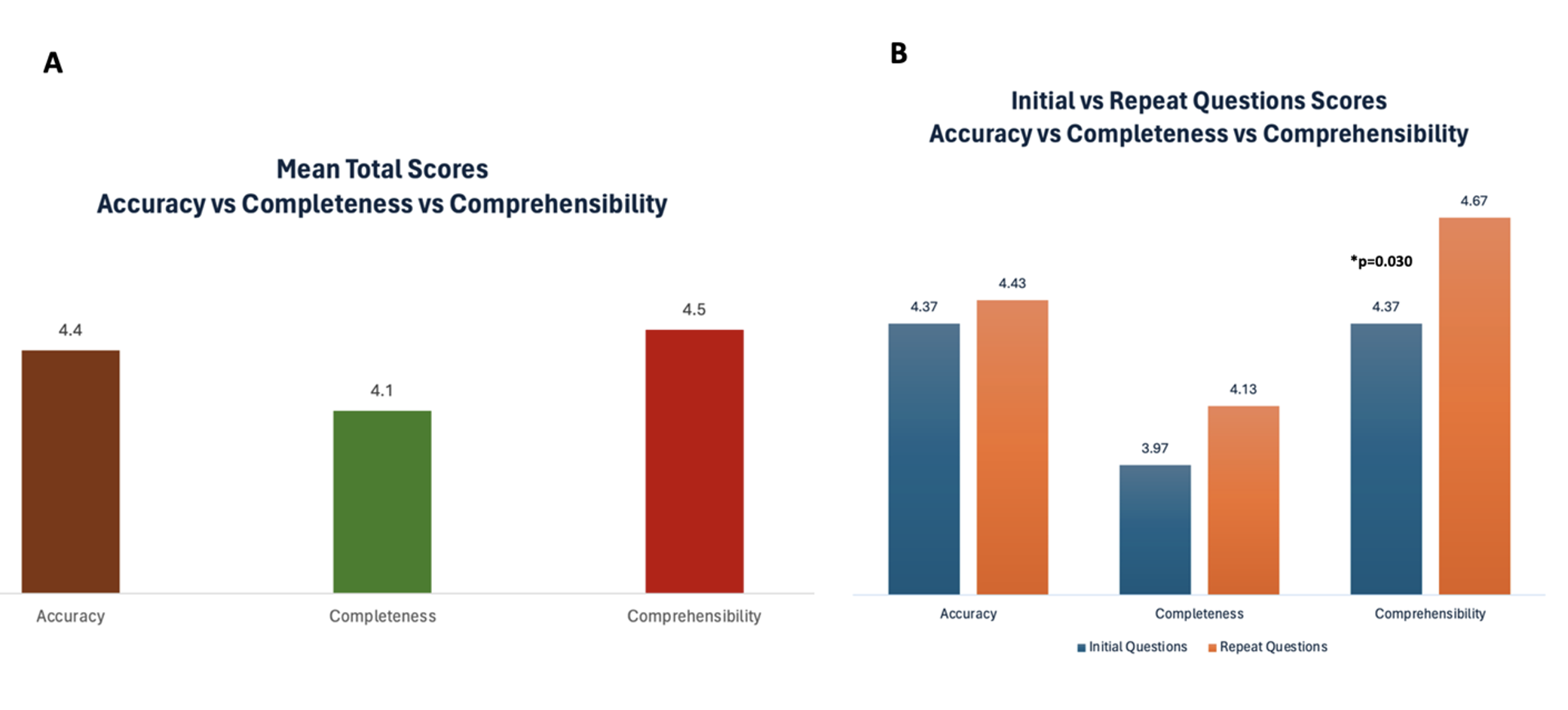

Results: A total of 180 ratings were recorded for the accuracy, completeness, and comprehensibility of 12 ChatGPT diet-related answers (60 ratings per category). Mean scores were 4.4 ± 0.6 for accuracy, 4.1 ± 0.6 for completeness, and 4.5 ± 0.5 for comprehensibility (Figure 1). Between initial and repeat questions, there were no significant differences in mean accuracy (4.4 vs 4.4, p=0.648) or completeness (3.9 vs 4.1, p=0.304), but comprehensibility scores differed significantly (4.3 vs 4.6, p=0.030). Mean completeness was also significantly lower than mean accuracy (p=0.001) and comprehensibility (p< 0.001).
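The rating counts in the Results follow from the study design in the Methods, assuming each of the five physicians rated every answer exactly once per category:

$$
\underbrace{3}_{\text{conditions}} \times \underbrace{2}_{\text{questions}} \times \underbrace{2}_{\text{sessions}} = 12 \text{ answers}, \qquad
12 \times 5 \text{ raters} = 60 \text{ ratings per category}, \qquad
60 \times 3 \text{ categories} = 180 \text{ ratings}.
$$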

Discussion: ChatGPT could be a useful resource for GI dietary information, as its responses were accurate, comprehensible, and mostly complete. Although the responses were generally reliable, comprehensibility varied between initial and repeat questions, which warrants further study.

Note: The table for this abstract can be viewed in the ePoster Gallery section of the ACG 2024 ePoster Site or in The American Journal of Gastroenterology's abstract supplement issue, both of which will be available starting October 27, 2024.


Figure 1. (A) Total mean scores for accuracy, completeness, and comprehensibility. (B) Comparison of accuracy, completeness, and comprehensibility scores between initial and repeat questions.


Disclosures:

Rabih Ghazi indicated no relevant financial relationships.

Thomas Judge indicated no relevant financial relationships.

Apeksha Shah indicated no relevant financial relationships.

Christina Tofani indicated no relevant financial relationships.

Rachel Frank indicated no relevant financial relationships.

Adib Chaaya indicated no relevant financial relationships.

Krysta Contino indicated no relevant financial relationships.

Rabih Ghazi, MD¹, Thomas Judge, MD², Apeksha Shah, MD³, Christina Tofani, MD, FACG⁴, Rachel Frank, MD⁵, Adib Chaaya, MD⁶, Krysta Contino, MD⁷. P1493 - Assessing ChatGPT’s Accuracy and Reliability in Providing Dietary Counseling for Common Gastrointestinal Conditions, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.