Tuesday Poster Session

Category: Colorectal Cancer Prevention

P3850 - Assessing the Efficacy of LLMs in Colonoscopy Interval Recommendations: A Comparative Study of GPT-4O and Gemini

Tuesday, October 29, 2024

10:30 AM - 4:00 PM ET

Location: Exhibit Hall E

Has Audio

Maziar Amini, MD

University of Southern California

Los Angeles, CA

Presenting Author(s)

Maziar Amini, MD1, Patrick Chang, MD1, Denis Nguyen, MD2, Jennifer Dodge, MPH2, Jennifer Phan, MD1, James Buxbaum, MD2, Ara Sahakian, MD2

1University of Southern California, Los Angeles, CA; 2Keck School of Medicine of the University of Southern California, Los Angeles, CA

Introduction: Utilization of artificial intelligence (AI) in healthcare continues to evolve, with large language models (LLMs) receiving .increased focus. This study provides updated insight in comparing the efficacy of two of the latest LLMs, GPT-4O (OpenAI) and Gemini 1.5 (Google), in recommending appropriate colonoscopy intervals in line with the United States Multi-Society Task Force (USMSTF) 2020 guidelines across diverse healthcare settings.

Methods: Our cross-sectional analysis included colonoscopy reports from a public safety-net hospital and a private tertiary medical center. This cohort of patients was used in our previous work evaluating GPT4, GPT3.5, and Google Bard and is used in our current work to provide an updated look at the latest versions. GPT-4O and Gemini 1.5 were tasked with recommending colonoscopy intervals based on USMSTF 2020 guidelines, procedure reports, and relevant pathology findings, which were entered verbatim. Their recommendations were compared to those of a guideline-directed panel.

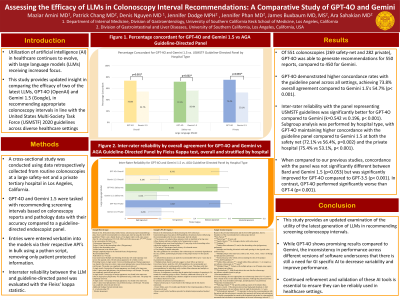

Results: Of 551 colonoscopies (269 safety-net and 282 private), GPT-4O was able to generate recommendations for 550 reports, compared to 450 for Gemini. GPT-4O demonstrated higher concordance rates with the guideline panel across all settings, achieving 73.8% overall agreement compared to Gemini 1.5's 54.7% (p< 0.001). Inter-rater reliability with the panel representing USMSTF guidelines was significantly better for GPT-4O compared to Gemini (k=0.528 vs 0.131, p< 0.001). Subgroup analysis was performed by hospital type, with GPT-4O maintaining higher concordance with the guideline panel compared to Gemini 1.5 at both the safety net (72.1% vs 56.4%, p=0.002) and the private hospital (75.4% vs 53.1%, p< 0.001). When compared to our previous studies, concordance with the panel was not significantly different between Bard and Gemini 1.5 (p=0.053) but was significantly improved for GPT-4O compared to GPT3.5 (p< 0.001). In contrast, GPT-4O performed significantly worse than GPT4 (p< 0.001).

Discussion: This study provides an updated examination of the utility of the latest generation of LLMs in recommending screening colonoscopy intervals. While GPT-4O shows promising results compared to Gemini, the inconsistency in performance across different versions of software underscores that there is still a need for GI specific AI to decrease variability and improve performance. Continued refinement and validation of these AI tools is essential to ensure they can be reliably used in healthcare settings.

Disclosures:

Maziar Amini, MD1, Patrick Chang, MD1, Denis Nguyen, MD2, Jennifer Dodge, MPH2, Jennifer Phan, MD1, James Buxbaum, MD2, Ara Sahakian, MD2. P3850 - Assessing the Efficacy of LLMs in Colonoscopy Interval Recommendations: A Comparative Study of GPT-4O and Gemini, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.

1University of Southern California, Los Angeles, CA; 2Keck School of Medicine of the University of Southern California, Los Angeles, CA

Introduction: Utilization of artificial intelligence (AI) in healthcare continues to evolve, with large language models (LLMs) receiving .increased focus. This study provides updated insight in comparing the efficacy of two of the latest LLMs, GPT-4O (OpenAI) and Gemini 1.5 (Google), in recommending appropriate colonoscopy intervals in line with the United States Multi-Society Task Force (USMSTF) 2020 guidelines across diverse healthcare settings.

Methods: Our cross-sectional analysis included colonoscopy reports from a public safety-net hospital and a private tertiary medical center. This cohort of patients was used in our previous work evaluating GPT4, GPT3.5, and Google Bard and is used in our current work to provide an updated look at the latest versions. GPT-4O and Gemini 1.5 were tasked with recommending colonoscopy intervals based on USMSTF 2020 guidelines, procedure reports, and relevant pathology findings, which were entered verbatim. Their recommendations were compared to those of a guideline-directed panel.

Results: Of 551 colonoscopies (269 safety-net and 282 private), GPT-4O was able to generate recommendations for 550 reports, compared to 450 for Gemini. GPT-4O demonstrated higher concordance rates with the guideline panel across all settings, achieving 73.8% overall agreement compared to Gemini 1.5's 54.7% (p< 0.001). Inter-rater reliability with the panel representing USMSTF guidelines was significantly better for GPT-4O compared to Gemini (k=0.528 vs 0.131, p< 0.001). Subgroup analysis was performed by hospital type, with GPT-4O maintaining higher concordance with the guideline panel compared to Gemini 1.5 at both the safety net (72.1% vs 56.4%, p=0.002) and the private hospital (75.4% vs 53.1%, p< 0.001). When compared to our previous studies, concordance with the panel was not significantly different between Bard and Gemini 1.5 (p=0.053) but was significantly improved for GPT-4O compared to GPT3.5 (p< 0.001). In contrast, GPT-4O performed significantly worse than GPT4 (p< 0.001).

Discussion: This study provides an updated examination of the utility of the latest generation of LLMs in recommending screening colonoscopy intervals. While GPT-4O shows promising results compared to Gemini, the inconsistency in performance across different versions of software underscores that there is still a need for GI specific AI to decrease variability and improve performance. Continued refinement and validation of these AI tools is essential to ensure they can be reliably used in healthcare settings.

Figure: Figure 1. Percentage Concordant for GPT-4O and Gemini 1.5 vs United States Multi-Society Task Force Guideline-Directed Panel by Hospital Type

Disclosures:

Maziar Amini indicated no relevant financial relationships.

Patrick Chang indicated no relevant financial relationships.

Denis Nguyen indicated no relevant financial relationships.

Jennifer Dodge indicated no relevant financial relationships.

Jennifer Phan indicated no relevant financial relationships.

James Buxbaum indicated no relevant financial relationships.

Ara Sahakian indicated no relevant financial relationships.

Maziar Amini, MD1, Patrick Chang, MD1, Denis Nguyen, MD2, Jennifer Dodge, MPH2, Jennifer Phan, MD1, James Buxbaum, MD2, Ara Sahakian, MD2. P3850 - Assessing the Efficacy of LLMs in Colonoscopy Interval Recommendations: A Comparative Study of GPT-4O and Gemini, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.