Sunday Poster Session

Category: Practice Management

P1494 - Assessment of ChatGPT-4’s Efficacy in Providing Guideline-Directed Assistance to Residents and Fellows for Critical GI Conditions in Acute Settings

Sunday, October 27, 2024

3:30 PM - 7:00 PM ET

Location: Exhibit Hall E

Rabih Ghazi, MD

Cooper University Hospital

Philadelphia, PA

Presenting Author(s)

Rabih Ghazi, MD¹, Thomas Judge, MD², Christina Tofani, MD, FACG³, Apeksha Shah, MD⁴, Adib Chaaya, MD⁵, Anthony Infantolino, MD⁶, Rachel Frank, MD⁷, Krysta Contino, MD⁸

¹Cooper University Hospital, Philadelphia, PA; ²Cooper Medical School of Rowan University, Mt. Laurel, NJ; ³Digestive Health Institute at Cooper University Hospital, Camden, NJ; ⁴Digestive Health Institute at Cooper University Hospital, Mt. Laurel, NJ; ⁵Cooper Health Gastroenterology, Camden, NJ; ⁶MD Anderson Cooper Digestive Health, Cherry Hill, NJ; ⁷Cooper University Hospital, Mt. Laurel, NJ; ⁸Digestive Health Institute at Cooper University Hospital, Mt. Laurel, NJ

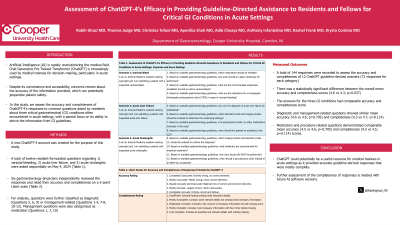

Introduction: Artificial Intelligence (AI) is rapidly revolutionizing the medical field. Chat Generative Pre-Trained Transformer (ChatGPT) is increasingly used by medical trainees for decision-making, particularly in acute settings. Despite its convenience and accessibility, concerns remain about the accuracy of the information provided, which can potentially jeopardize patient safety. In this study, we assess the accuracy and completeness of ChatGPT-4's responses to common questions asked by residents about three critical gastrointestinal (GI) conditions often encountered in acute settings, with a special focus on its ability to derive the information from GI guidelines.

Methods: A new ChatGPT-4 account was created for the purpose of this study. A total of twelve resident-formulated questions regarding 1) variceal bleeding, 2) acute liver failure, and 3) acute cholangitis were asked sequentially on May 4, 2024 (Table 1). A new chat session was used for each GI condition. Six gastroenterology physicians independently reviewed the responses and rated their accuracy and completeness on a 5-point Likert scale (Table 1). For analysis, questions were further classified as diagnostic (Questions 5, 6, 9) or management-related (Questions 1-4, 7-8, 10-12). Management questions were also categorized as medication (Questions 1, 7, 10) or procedure-related (Questions 2, 3, 4, 11, 12). Outcomes were compared using Student’s t-test or ANOVA.
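As an illustration of the comparison described in the Methods, the following is a minimal sketch of a pooled-variance (Student's) two-sample t statistic computed with the Python standard library. The ratings shown are hypothetical placeholders, not study data, and the function name `student_t` is an assumption introduced only for this example; the abstract does not state which software the authors used.

```python
from math import sqrt
from statistics import mean, variance


def student_t(a, b):
    """Return the pooled-variance (Student's) t statistic and degrees of freedom."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pooled variance assumes both groups share a common variance.
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, df


# Hypothetical 5-point Likert ratings from six reviewers for one answer.
# These values are invented for illustration and are NOT the study's data.
accuracy = [5, 4, 5, 5, 4, 5]
completeness = [4, 4, 5, 3, 4, 4]

t, df = student_t(accuracy, completeness)
print(f"mean accuracy={mean(accuracy):.2f}, "
      f"mean completeness={mean(completeness):.2f}, t={t:.2f}, df={df}")
```

Converting the statistic to a p-value requires the t distribution, which the standard library does not provide; `scipy.stats.ttest_ind(accuracy, completeness)` would return both values under the same equal-variance assumption.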

Results: Six reviewers rated each of the 12 ChatGPT guideline-derived answers for accuracy and for completeness, yielding 144 ratings (72 per category). Overall mean accuracy was significantly higher than overall mean completeness (4.6 vs 4.3; p=0.037). The answers for the three GI conditions did not differ significantly in accuracy (4.4 vs 4.7 vs 4.5; p=0.129) or completeness (4.2 vs 4.4 vs 4.5; p=0.322). Diagnostic and management-related questions showed similar mean accuracy (4.6 vs 4.6; p=0.705) and completeness (4.3 vs 4.7; p=0.114). Likewise, medication and procedure-related questions showed comparable mean accuracy (4.5 vs 4.6; p=0.705) and completeness (4.2 vs 4.5; p=0.114).

Discussion: ChatGPT could potentially be a useful resource for medical trainees in acute settings as it provided accurate guideline-derived responses that were mostly complete. Further assessment of the completeness of responses is needed with future AI software versions.

Note: The table for this abstract can be viewed in the ePoster Gallery section of the ACG 2024 ePoster Site or in The American Journal of Gastroenterology's abstract supplement issue, both of which will be available starting October 27, 2024.

Rabih Ghazi, MD, Thomas Judge, MD, Christina Tofani, MD, FACG, Apeksha Shah, MD, Adib Chaaya, MD, Anthony Infantolino, MD, Rachel Frank, MD, Krysta Contino, MD. P1494 - Assessment of ChatGPT-4’s Efficacy in Providing Guideline-Directed Assistance to Residents and Fellows for Critical GI Conditions in Acute Settings, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.

Disclosures:

Rabih Ghazi indicated no relevant financial relationships.

Thomas Judge indicated no relevant financial relationships.

Christina Tofani indicated no relevant financial relationships.

Apeksha Shah indicated no relevant financial relationships.

Adib Chaaya indicated no relevant financial relationships.

Anthony Infantolino indicated no relevant financial relationships.

Rachel Frank indicated no relevant financial relationships.

Krysta Contino indicated no relevant financial relationships.
