Sunday Poster Session

Category: Practice Management

P1496 - Language AI Bots as Pioneers in Diagnosis? A Retrospective Analysis on Real-time Patients at a Tertiary Care Center

Sunday, October 27, 2024

3:30 PM - 7:00 PM ET

Location: Exhibit Hall E

Syed Mujtaba Baqir, MD

Maimonides Medical Center

Brooklyn, NY

Presenting Author(s)

Omair Khan, MD1, Azka Naeem, MBBS1, Syed Mujtaba Baqir, MD1, Kundan Jana, MBBS1, Avleen Kaur, 2, Morad Zaaya, MBCh1, Marlon Rivera, MD1, Fatima Sajid, MBBS1, Fizza Mohsin, MBBS1, Aung Oo, MD1, Prem Shankar, MD1, Kseniya Slobodyanyuk, MD1, Aaron Z. Tokayer, MD, MHS, FACG1, Vijay Shetty, MD1

1Maimonides Medical Center, Brooklyn, NY; 2SUNY Upstate Medical Hospital, Syracuse, NY

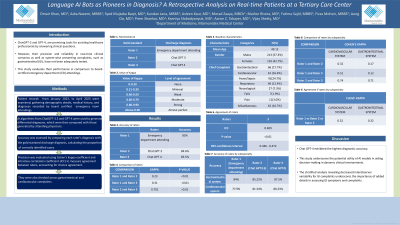

Introduction: ChatGPT-3.5 and GPT-4 are promising tools for assisting healthcare professionals in answering clinical questions. However, their precision and reliability in real-time clinical scenarios, and across system-specific presenting complaints such as gastrointestinal (GI) complaints, have not been adequately tested. This study evaluates their performance against that of board-certified emergency department (ED) attendings.

Methods: Patient records from January 1, 2023, to April 30, 2023, were reviewed for demographic details, medical history, and the diagnoses recorded by board-certified ED attendings. ChatGPT-3.5 and GPT-4 were used to generate differential diagnoses, which were then compared with those of the attending physicians. Accuracy was assessed by comparing each rater's diagnosis with the gold-standard discharge diagnosis and calculating the proportion of correctly identified cases. Precision was evaluated as agreement between raters using Cohen's kappa coefficient, which accounts for chance agreement, and the intraclass correlation coefficient (ICC). Accuracy and agreement were also stratified by gastrointestinal and cardiovascular complaints.
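The accuracy and Cohen's kappa calculations described in the Methods can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code: it assumes each case is coded 1 when a rater's diagnosis matched the discharge diagnosis and 0 otherwise, and the rater vectors (`gpt4_correct`, `gpt35_correct`) are hypothetical toy data, not study data.

```python
"""Minimal sketch: per-rater accuracy and pairwise Cohen's kappa.

Assumption (not stated in the abstract): each case is coded 1 if the rater's
diagnosis matched the gold-standard discharge diagnosis, else 0.
"""
from collections import Counter
from collections.abc import Hashable, Sequence


def accuracy(correct: Sequence[int]) -> float:
    """Proportion of cases the rater identified correctly."""
    if not correct:
        raise ValueError("no cases to score")
    return sum(correct) / len(correct)


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same cases."""
    if len(a) != len(b) or not a:
        raise ValueError("raters must label the same non-empty set of cases")
    n = len(a)
    # Observed agreement: fraction of cases given identical labels.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Expected chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical toy data for 10 cases (1 = matched discharge diagnosis).
gpt4_correct = [1, 1, 1, 0, 1, 1, 1, 1, 0, 1]
gpt35_correct = [1, 1, 0, 0, 1, 1, 1, 1, 1, 1]

print(f"GPT-4 accuracy: {accuracy(gpt4_correct):.1%}")
print(f"ChatGPT-3.5 accuracy: {accuracy(gpt35_correct):.1%}")
print(f"Cohen's kappa: {cohens_kappa(gpt4_correct, gpt35_correct):.3f}")
```

Because `cohens_kappa` accepts any hashable labels, the same function could be applied to the diagnosis categories themselves rather than to correct/incorrect codes; the abstract does not state which coding the study used.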

Results: The mean age of patients was 49.12 years; 57.3% were male and 42.7% female. The most common chief complaints were fever/sepsis (24.7%), GI (17.7%), and cardiovascular (16.4%). GPT-4 had the highest accuracy (85.5%), followed by ChatGPT-3.5 (84.6%) and the ED attendings (83%). Reliability analysis showed moderate agreement (0.7) between ChatGPT-3.5 and GPT-4, with lower agreement observed for the ED attendings. Inter-rater reliability assessed by the ICC was 0.41, indicating poor agreement. The stratified analysis showed higher accuracy for gastrointestinal than for cardiovascular complaints. GPT-4 had the highest accuracy for gastrointestinal complaints (87.5%), whereas ChatGPT-3.5 performed best for cardiovascular complaints (81.34%). Overall, agreement among raters was better for cardiovascular diagnoses than for gastrointestinal ones.
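The reported ICC can likewise be sketched. The abstract does not say which ICC form was used, so the sketch below assumes the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1) in Shrout and Fleiss's notation, computed from ANOVA mean squares over an n-cases by k-raters matrix. The demo matrix is the classic six-subject, four-judge example from Shrout and Fleiss (1979), not study data; its ICC(2,1) is about 0.29. Under widely used cut-offs (Koo and Li, 2016), values below 0.5, such as the study's 0.41, are read as poor reliability.

```python
"""Minimal sketch of ICC(2,1): two-way random effects, absolute agreement,
single rater (Shrout & Fleiss notation). Which ICC form the study used is an
assumption here; the abstract does not specify it.
"""
from collections.abc import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1) for an n-cases x k-raters matrix with no missing values."""
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete matrix with at least 2 cases and 2 raters")
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    # Two-way ANOVA sums of squares: cases (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_err = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    denom = msr + (k - 1) * mse + k * (msc - mse) / n
    if denom == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (msr - mse) / denom


# Shrout & Fleiss (1979) example: 6 subjects (rows) rated by 4 judges (columns).
example = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(f"ICC(2,1) = {icc_2_1(example):.2f}")
```

For the study's data, each row would be one patient and each column one rater (ChatGPT-3.5, GPT-4, ED attending); how the diagnoses were scored numerically is not described in the abstract.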

Discussion: GPT-4 showed the highest diagnostic accuracy. This study underscores the potential utility of AI models in aiding decision-making in dynamic clinical environments. The stratified analysis, which showed greater interobserver variability for GI complaints, underscores the importance of additional detail when assessing GI symptoms and complaints.

Omair Khan, MD1, Azka Naeem, MBBS1, Syed Mujtaba Baqir, MD1, Kundan Jana, MBBS1, Avleen Kaur, 2, Morad Zaaya, MBCh1, Marlon Rivera, MD1, Fatima Sajid, MBBS1, Fizza Mohsin, MBBS1, Aung Oo, MD1, Prem Shankar, MD1, Kseniya Slobodyanyuk, MD1, Aaron Z. Tokayer, MD, MHS, FACG1, Vijay Shetty, MD1. P1496 - Language AI Bots as Pioneers in Diagnosis? A Retrospective Analysis on Real-time Patients at a Tertiary Care Center, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.

Disclosures:

Omair Khan indicated no relevant financial relationships.

Azka Naeem indicated no relevant financial relationships.

Syed Mujtaba Baqir indicated no relevant financial relationships.

Kundan Jana indicated no relevant financial relationships.

Avleen Kaur indicated no relevant financial relationships.

Morad Zaaya indicated no relevant financial relationships.

Marlon Rivera indicated no relevant financial relationships.

Fatima Sajid indicated no relevant financial relationships.

Fizza Mohsin indicated no relevant financial relationships.

Aung Oo indicated no relevant financial relationships.

Prem Shankar indicated no relevant financial relationships.

Kseniya Slobodyanyuk indicated no relevant financial relationships.

Aaron Tokayer indicated no relevant financial relationships.

Vijay Shetty indicated no relevant financial relationships.
