Sunday Poster Session

Category: Practice Management

P1501 - Comparative Evaluation of Various Artificially Intelligent Chatbots for Management Recommendations of Common Gastroenterology Diseases

Sunday, October 27, 2024

3:30 PM - 7:00 PM ET

Location: Exhibit Hall E

Daniel Willcockson, MD, MPH

University of Wisconsin Hospitals and Clinics

Fitchburg, WI

Presenting Author(s)

Daniel Willcockson, MD, MPH

University of Wisconsin Hospitals and Clinics, Fitchburg, WI

Introduction: The integration of artificial intelligence (AI) into healthcare, particularly gastroenterology, has the potential to improve diagnostic accuracy, patient management, and clinical workflows. Advanced AI tools such as ChatGPT-4o, Gemini Pro, and Perplexity Pro are capable of interpreting endoscopic images, analyzing clinical data, and automating various administrative tasks. These capabilities can streamline medical procedures and help personalize treatment plans.

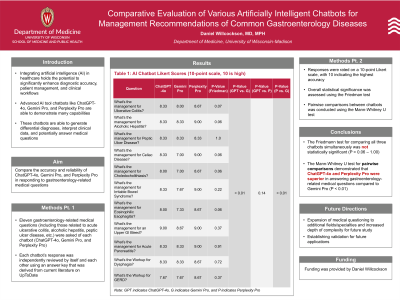

Methods: This study compared the accuracy and reliability of ChatGPT-4o, Gemini Pro, and Perplexity Pro in responding to gastroenterology-related medical management questions, including those related to ulcerative colitis, alcoholic hepatitis, peptic ulcer disease, and others. Each chatbot's response was independently reviewed by that chatbot and by each of the other chatbots, using an answer key derived from current literature on UpToDate. Responses were rated on a 10-point Likert scale, with 10 indicating the highest accuracy. Overall statistical significance was assessed using the Friedman test, while pairwise comparisons between chatbots were conducted using the Mann-Whitney U test.
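To illustrate the two tests named in the Methods, the following is a minimal Python sketch using only the standard library. It is not the authors' analysis: the abstract does not state the software used, the number of questions, or any of the individual ratings, so the labels `chatbot_a`, `chatbot_b`, `chatbot_c` and every rating below are hypothetical placeholders. The Mann-Whitney P value here uses a normal approximation with tie and continuity corrections, which is an assumption; the Friedman P value uses the closed form of the chi-square distribution with 2 degrees of freedom, which applies only when exactly three chatbots are compared.

```python
import math
from collections import Counter
from itertools import combinations


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_test(samples):
    """Friedman chi-square (tie-corrected) for three related samples."""
    k, n = len(samples), len(samples[0])
    if k != 3 or any(len(s) != n for s in samples):
        raise ValueError("this sketch handles exactly three equal-length samples")
    rank_sums = [0.0] * k
    tie_term = 0
    for row in zip(*samples):  # one row = one question rated for each chatbot
        for col, rank in enumerate(average_ranks(list(row))):
            rank_sums[col] += rank
        tie_term += sum(t * (t * t - 1) for t in Counter(row).values())
    correction = 1 - tie_term / (k * (k * k - 1) * n)
    stat = (12 / (k * n * (k + 1)) * sum(r * r for r in rank_sums)
            - 3 * n * (k + 1)) / correction
    return stat, math.exp(-stat / 2)  # chi-square survival function, 2 df


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test via the normal approximation."""
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    total = n1 + n2
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((total + 1) - tie_term / (total * (total - 1)))
    # Continuity correction of 0.5, clamped so z is never negative.
    z = max(abs(u1 - n1 * n2 / 2) - 0.5, 0.0) / math.sqrt(variance)
    return u1, math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    # HYPOTHETICAL 10-point ratings for six questions; not the study's data.
    ratings = {
        "chatbot_a": [7, 8, 6, 9, 7, 8],
        "chatbot_b": [6, 8, 7, 8, 7, 7],
        "chatbot_c": [9, 9, 8, 9, 8, 9],
    }
    stat, p = friedman_test(list(ratings.values()))
    print(f"Friedman: statistic={stat:.3f}, P={p:.4f}")
    for first, second in combinations(ratings, 2):
        u, p = mann_whitney_u(ratings[first], ratings[second])
        print(f"{first} vs {second}: U={u:.1f}, P={p:.4f}")
```

In practice, library routines such as `scipy.stats.friedmanchisquare` and `scipy.stats.mannwhitneyu` would likely be used instead of hand-written functions. Note that the Friedman test treats the ratings as related samples (the same questions are rated for every chatbot), whereas the Mann-Whitney U test treats the two groups being compared as independent.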

Results: The Friedman test showed no significant difference in the chatbots' abilities to answer medical management questions accurately and reliably (P = 0.06 – 1.0). Despite the lack of statistical significance, Perplexity Pro consistently achieved higher Likert scores than ChatGPT-4o and Gemini Pro. In pairwise comparisons, however, the Mann-Whitney U test revealed significant differences between ChatGPT-4o and Perplexity Pro (P = 0.001) and between Gemini Pro and Perplexity Pro (P = 0.0003).

Discussion: AI chatbots such as ChatGPT-4o, Gemini Pro, and Perplexity Pro demonstrate substantial potential for enhancing gastroenterology practice. While the Friedman test showed no significant difference (P = 0.06 – 1.0), pairwise comparisons revealed Perplexity Pro's superior performance in answering gastroenterology-related medical questions.

The comparable performance among these chatbots, however, underscores the necessity for continued development and evaluation to ensure their effectiveness in clinical settings. Future studies should focus on refining these AI tools and exploring their integration into clinical practice, such as answering patient portal messages.

Of note, AI was used in the development of this abstract.

Note: The table for this abstract can be viewed in the ePoster Gallery section of the ACG 2024 ePoster Site or in The American Journal of Gastroenterology's abstract supplement issue, both of which will be available starting October 27, 2024.

Daniel Willcockson, MD, MPH. P1501 - Comparative Evaluation of Various Artificially Intelligent Chatbots for Management Recommendations of Common Gastroenterology Diseases, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.

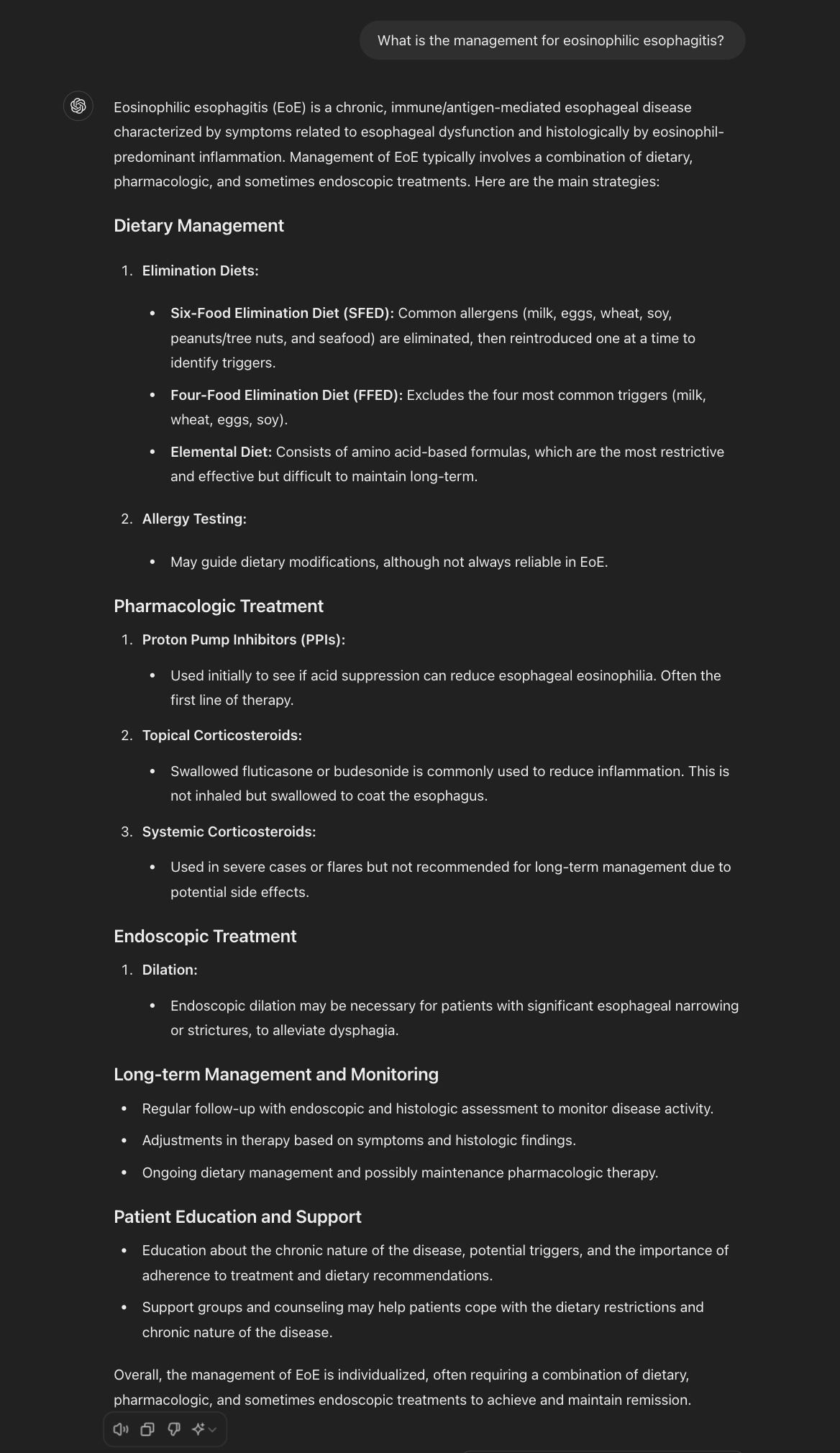

Figure: ChatGPT-4o's response to "what is the management for eosinophilic esophagitis?"

Disclosures:

Daniel Willcockson indicated no relevant financial relationships.
