Tuesday Poster Session

Category: Biliary/Pancreas

P3477 - Assessing the Ability of ChatGPT 4.0 as an Educational Tool for Patients With Pancreatic Cysts

Tuesday, October 29, 2024

10:30 AM - 4:00 PM ET

Location: Exhibit Hall E

Frances Dang, MD

University of California, Irvine

Orange, CA

Presenting Author(s)

Award: Presidential Poster Award

Frances Dang, MD¹, Shoujit Banerjee, MD², Andy L. Lin, MD¹, Trevor McCracken, MD², Joshua Kwon, MD², Amirali Tavangar, MD¹, David L. Cheung, MD¹, Peter H. Nguyen, MD¹, Jason Samarasena, MD¹

¹University of California, Irvine, Orange, CA; ²University of California, Irvine, Orange, CA

Introduction: As imaging technology continues to improve, pancreatic cysts are being diagnosed with increasing frequency. ChatGPT is an artificial intelligence (AI) large language model (LLM) designed for conversational and interactive dialogue. Patients newly diagnosed with pancreatic cysts often have specific questions about their condition. Our study aims to evaluate the ability of ChatGPT 4.0 to provide high-quality, medically accurate, and comprehensible responses to common patient questions about pancreatic cysts.

Methods: Fourteen frequently asked questions (FAQs) regarding pancreatic cysts were compiled and entered into ChatGPT 4.0 to generate responses. Three board-certified attending gastroenterologists with expertise in the management of pancreatic cysts were recruited to produce written responses to the same FAQs. Both sets of answers were then anonymized, randomized, and distributed to a group of three blinded patients with a diagnosis of pancreatic cyst. Using a 5-point Likert scale, the patients were asked to rate responses on quality, clarity, and empathy, and to indicate which of the two responses they preferred. Furthermore, respondents were asked to indicate whether each answer was physician-generated or AI-generated.
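The anonymization and randomization step described above could be sketched as follows. This is only an illustration under assumptions: the abstract does not describe how the survey was actually assembled, and the function name `build_blinded_survey`, the labels "Response A" and "Response B", and the fixed seed are all hypothetical.

```python
import random


def build_blinded_survey(faqs, physician_answers, ai_answers, seed=0):
    """Pair the two answers for each FAQ and shuffle their order.

    Returns (survey, key). The survey shows only unlabeled responses;
    the key records which source produced each response and is kept
    away from the raters. All names here are hypothetical.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    survey, key = [], []
    for question, md_text, ai_text in zip(faqs, physician_answers, ai_answers):
        pair = [("physician", md_text), ("ai", ai_text)]
        rng.shuffle(pair)  # randomize which source appears first
        survey.append({
            "question": question,
            "Response A": pair[0][1],
            "Response B": pair[1][1],
        })
        key.append({"Response A": pair[0][0], "Response B": pair[1][0]})
    return survey, key


# Hypothetical placeholder content, not the study's actual FAQs or answers.
faqs = ["Example question 1", "Example question 2"]
physician_answers = ["Physician answer 1", "Physician answer 2"]
ai_answers = ["AI answer 1", "AI answer 2"]
survey, key = build_blinded_survey(faqs, physician_answers, ai_answers)
```

Keeping the answer key in a separate structure lets the raters' guesses about the source be scored afterwards without exposing the source during rating.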

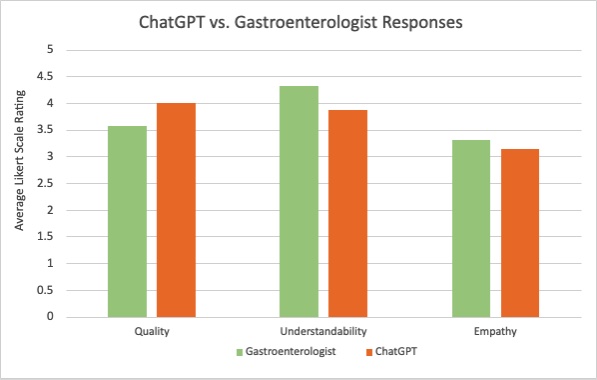

Results: Quality scores did not differ significantly between gastroenterologist responses (3.57) and ChatGPT responses (4.00), p = 0.056. The patient panel found gastroenterologist responses easier to understand (4.31) than ChatGPT responses (3.86), p = 0.0159. Empathy scores did not differ significantly between physician responses (3.31) and ChatGPT responses (3.14), p = 0.18. Patients preferred ChatGPT responses 57% of the time. The panel correctly identified AI responses in most cases (83.3%).
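The abstract does not state which statistical test produced the p-values above. As one possible approach, and not necessarily the authors' method, paired per-question scores could be compared with an exact sign-flip permutation test. The function name `paired_sign_flip_test` and every score in the sketch below are hypothetical and are not the study's data.

```python
from itertools import product


def paired_sign_flip_test(scores_a, scores_b):
    """Exact two-sided sign-flip permutation test on paired scores.

    Under the null hypothesis each paired difference is equally likely
    to be positive or negative, so every sign pattern is enumerated and
    the p-value is the share of patterns whose absolute summed
    difference is at least as large as the observed one.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))
    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        flipped = abs(sum(s * d for s, d in zip(signs, diffs)))
        if flipped >= observed - 1e-12:  # tolerance for float rounding
            extreme += 1
    return extreme / total


# Hypothetical per-question scores for 14 FAQs (NOT the study's data).
physician_clarity = [4.3, 4.7, 4.0, 4.3, 4.7, 4.0, 4.3, 4.7, 4.3, 4.0, 4.3, 4.7, 4.0, 4.3]
chatgpt_clarity = [4.0, 3.7, 4.0, 3.7, 4.3, 3.7, 4.0, 3.7, 4.0, 3.3, 4.0, 4.3, 3.7, 3.7]
p_value = paired_sign_flip_test(physician_clarity, chatgpt_clarity)
```

With 14 questions there are only 2^14 = 16,384 sign patterns, so exact enumeration is cheap. A rank-based test such as the Wilcoxon signed-rank test would be another reasonable choice for ordinal Likert data.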

Discussion: The burden on physicians of answering in-basket messages from patients is growing. Responding to a patient's questions about a new diagnosis of pancreatic cysts can be especially time-consuming. This study showed that ChatGPT was able to generate responses to common questions about pancreatic cysts that were similar in quality and empathy to those of human gastroenterologists. In fact, patients in this study preferred the ChatGPT responses over the gastroenterologist responses. As LLMs continue to evolve, the current iteration of ChatGPT may already have the potential to be used in specific clinical scenarios, such as pancreatic cysts, to streamline patient communication and to help educate patients about their diagnosis.

Frances Dang, MD¹, Shoujit Banerjee, MD², Andy L. Lin, MD¹, Trevor McCracken, MD², Joshua Kwon, MD², Amirali Tavangar, MD¹, David L. Cheung, MD¹, Peter H. Nguyen, MD¹, Jason Samarasena, MD¹. P3477 - Assessing the Ability of ChatGPT 4.0 as an Educational Tool for Patients With Pancreatic Cysts, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.

Figure: Figure A. Comparison between ChatGPT vs. Gastroenterologist Responses.

Disclosures:

Frances Dang indicated no relevant financial relationships.

Shoujit Banerjee indicated no relevant financial relationships.

Andy Lin indicated no relevant financial relationships.

Trevor McCracken indicated no relevant financial relationships.

Joshua Kwon indicated no relevant financial relationships.

Amirali Tavangar indicated no relevant financial relationships.

David Cheung indicated no relevant financial relationships.

Peter Nguyen indicated no relevant financial relationships.

Jason Samarasena: Cook Medical – Consultant. Medtronic – Advisory Committee/Board Member, Consultant. Neptune Medical – Advisory Committee/Board Member, Consultant. Olympus – Advisory Committee/Board Member, Consultant. Ovesco – Advisory Committee/Board Member, Consultant, Speakers Bureau. SatisfAI – Stock-privately held company. Steris – Advisory Committee/Board Member.
