Monday Poster Session

Category: Obesity

P3168 - Assessing Online Chat-Based Artificial Intelligence Models for Weight Loss Recommendation Appropriateness and Bias in the Presence of Guideline Incongruence

Monday, October 28, 2024

10:30 AM - 4:00 PM ET

Location: Exhibit Hall E

Eugene Annor, MD, MPH

University of Illinois College of Medicine

Morton, IL

Presenting Author(s)

Eugene Annor, MD, MPH1, Joseph O. Atarere, MD, MPH2, Nneoma R. Ubah, MD, MPH3, Oladoyin Jolaoye, DO4, Bryce Kunkle, MD5, Fariha Hasan, MD6, Olachi Egbo, MD7, Daniel K. Martin, MD4

1University of Illinois College of Medicine, Morton, IL; 2MedStar Union Memorial Hospital, Baltimore, MD; 3Montefiore St. Luke's Cornwall Hospital, Newburgh, NY; 4University of Illinois College of Medicine, Peoria, IL; 5MedStar Georgetown University Hospital, Washington, DC; 6Cooper University Hospital, Philadelphia, PA; 7Aurora Medical Center, Oshkosh, WI

Introduction: Managing obesity requires a comprehensive approach that involves therapeutic lifestyle changes, medications, or metabolic surgery. Many patients seek health information from online sources and artificial intelligence models like ChatGPT, Google Gemini, and Microsoft Copilot before consulting health professionals. This study aims to evaluate the appropriateness of the responses of Google Gemini and Microsoft Copilot to questions on the pharmacologic and surgical management of obesity, and to assess whether their responses are biased toward either the ADA or the AACE guidelines.

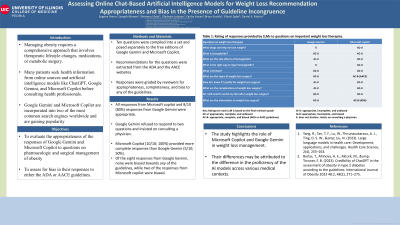

Methods: Ten questions were compiled into a set and posed separately to the free editions of Google Gemini and Microsoft Copilot. Recommendations relevant to each question were extracted from the ADA and AACE websites, and reviewers graded the responses for appropriateness, completeness, and bias toward either guideline.

Results: All responses from Microsoft Copilot (10/10; 100%) and 8/10 (80%) responses from Google Gemini were appropriate. There were no inappropriate responses. Google Gemini declined to answer two questions and instead advised consulting a physician. Microsoft Copilot (10/10; 100%) provided a higher proportion of complete responses than Google Gemini (5/10; 50%). Of the eight responses from Google Gemini, none were biased toward either guideline, while two of the responses from Microsoft Copilot were biased.
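The proportions reported above can be recomputed from the raw counts. The short Python sketch below is illustrative only and is not part of the authors' analysis; the dictionary layout and the names `reported`, `percent`, and `summary` are assumptions introduced here, and the zero "declined" count for Microsoft Copilot is inferred from the statement that all of its responses were appropriate.

```python
# Counts taken from the Results paragraph; each model was asked 10 questions.
QUESTIONS = 10

reported = {
    "Microsoft Copilot": {"appropriate": 10, "declined": 0, "complete": 10, "biased": 2},
    "Google Gemini": {"appropriate": 8, "declined": 2, "complete": 5, "biased": 0},
}


def percent(count, total=QUESTIONS):
    """Return count as a percentage of total."""
    return 100 * count / total


# Percentage of the 10 questions falling in each category, per model.
summary = {
    model: {category: percent(count) for category, count in counts.items()}
    for model, counts in reported.items()
}

for model, row in summary.items():
    print(model, row)
```

All percentages here use the ten questions as the denominator, which matches how the abstract reports appropriateness and completeness.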

Discussion: The study highlights the role of Microsoft Copilot and Google Gemini in weight loss management and further advocates for their integration into healthcare practice. The differences between the two models may reflect varying proficiency across medical contexts. Further studies may be required to gain a deeper understanding of the competencies of these language models.

Note: The table for this abstract can be viewed in the ePoster Gallery section of the ACG 2024 ePoster Site or in The American Journal of Gastroenterology's abstract supplement issue, both of which will be available starting October 27, 2024.

Eugene Annor, MD, MPH1, Joseph O. Atarere, MD, MPH2, Nneoma R. Ubah, MD, MPH3, Oladoyin Jolaoye, DO4, Bryce Kunkle, MD5, Fariha Hasan, MD6, Olachi Egbo, MD7, Daniel K. Martin, MD4. P3168 - Assessing Online Chat-Based Artificial Intelligence Models for Weight Loss Recommendation Appropriateness and Bias in the Presence of Guideline Incongruence, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.

Disclosures:

Eugene Annor indicated no relevant financial relationships.

Joseph Atarere indicated no relevant financial relationships.

Nneoma Ubah indicated no relevant financial relationships.

Oladoyin Jolaoye indicated no relevant financial relationships.

Bryce Kunkle indicated no relevant financial relationships.

Fariha Hasan indicated no relevant financial relationships.

Olachi Egbo indicated no relevant financial relationships.

Daniel Martin indicated no relevant financial relationships.
