Monday Poster Session

Category: Colon

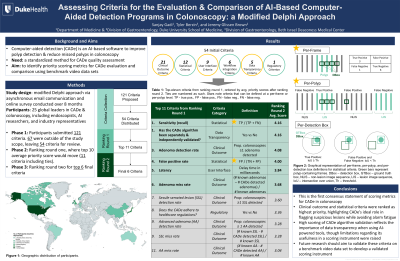

P1939 - Standardizing Criteria for the Comparison of AI-Based Computer-Aided Detection Programs in Colonoscopy: A Modified Delphi Approach

Monday, October 28, 2024

10:30 AM - 4:00 PM ET

Location: Exhibit Hall E


Sanjay Gadi, MD

Duke University Medical Center

Durham, NC

Presenting Author(s)

Sanjay Gadi, MD1, Jeremy Glissen Brown, MD, MSc1, Tyler M. Berzin, MD2

1Duke University Medical Center, Durham, NC; 2Beth Israel Deaconess Medical Center, Harvard Medical School, Boston, MA

Introduction: Artificial intelligence (AI)-based computer-aided detection (CADe) software may improve colorectal cancer outcomes by increasing adenoma detection while reducing miss rates during colonoscopy. As CADe programs are developed and refined, there is a need for standardized assessment of CADe performance. We aimed to identify priority scoring metrics by which CADe can be evaluated and compared using benchmark video data sets.
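The two clinical outcomes named above are usually quantified as the adenoma detection rate (ADR) and the adenoma miss rate (AMR). The abstract does not define either one. The sketch below therefore assumes the conventional definitions. ADR is the share of colonoscopies in which at least one adenoma is detected. AMR is the share of all adenomas found across two tandem examinations that the first examination missed. The `Procedure` record and the function names are hypothetical and are used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Procedure:
    """Hypothetical per-colonoscopy record used only for this illustration."""
    adenomas_first_pass: int   # adenomas found during the index (first) examination
    adenomas_second_pass: int  # extra adenomas found only on a tandem (second) examination


def adenoma_detection_rate(procedures: list[Procedure]) -> float:
    """Share of procedures in which the first pass detected at least one adenoma."""
    if not procedures:
        raise ValueError("need at least one procedure")
    with_adenoma = sum(1 for p in procedures if p.adenomas_first_pass >= 1)
    return with_adenoma / len(procedures)


def adenoma_miss_rate(procedures: list[Procedure]) -> float:
    """Adenomas missed on the first pass divided by all adenomas found across both passes."""
    missed = sum(p.adenomas_second_pass for p in procedures)
    total = sum(p.adenomas_first_pass + p.adenomas_second_pass for p in procedures)
    if total == 0:
        raise ValueError("no adenomas found in either pass")
    return missed / total


cohort = [Procedure(1, 0), Procedure(0, 1), Procedure(2, 1), Procedure(0, 0)]
print(adenoma_detection_rate(cohort))  # 2 of 4 procedures -> 0.5
print(adenoma_miss_rate(cohort))       # 2 missed of 5 total -> 0.4
```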

Methods: A modified Delphi approach was used to build consensus. Twenty-five global leaders in CADe, including endoscopists, researchers, and industry representatives, participated. In Phase 1, participants submitted criteria, which were then distributed for review and open comment via asynchronous email. In Phase 2, participants ranked the criteria on a five-point Likert scale from lowest to highest priority, and the top 10 criteria were identified (11 in total, because of one tie). In Phase 3, a final round of review and ranking yielded the final 6 criteria (Table 1).
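The Phase 2 selection rule can be sketched as follows. The sketch rests on two assumptions that the abstract does not state. First, criteria are ordered by their mean Likert score. Second, any criterion tied with the 10th-ranked one is retained, which is one way a top-10 cut can yield 11 criteria. The function name and the ratings are hypothetical.

```python
from statistics import mean


def select_top_criteria(ratings: dict[str, list[int]], k: int) -> list[tuple[str, float]]:
    """Rank criteria by mean Likert score (1 = lowest, 5 = highest priority) and keep
    the top k, extending the cut so that criteria tied with the k-th one are kept too."""
    if k < 1:
        raise ValueError("k must be at least 1")
    for name, scores in ratings.items():
        if not scores or any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"invalid Likert scores for {name!r}")
    # sorted() is stable even with reverse=True, so tied criteria keep their input order
    ranked = sorted(
        ((name, mean(scores)) for name, scores in ratings.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    if len(ranked) <= k:
        return ranked
    cutoff = ranked[k - 1][1]  # mean score of the k-th ranked criterion
    return [item for item in ranked if item[1] >= cutoff]


ratings = {
    "sensitivity": [5, 4, 4],
    "latency": [4, 5, 4],
    "adenoma detection rate": [5, 5, 4],
    "user interface color": [2, 3, 2],
}
top = select_top_criteria(ratings, k=2)
print([name for name, _ in top])
# ['adenoma detection rate', 'sensitivity', 'latency']: the tie at rank 2 keeps 3 criteria
```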

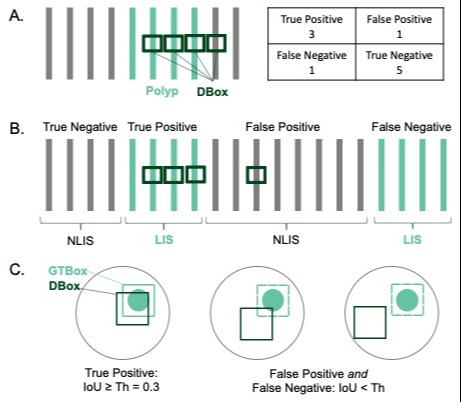

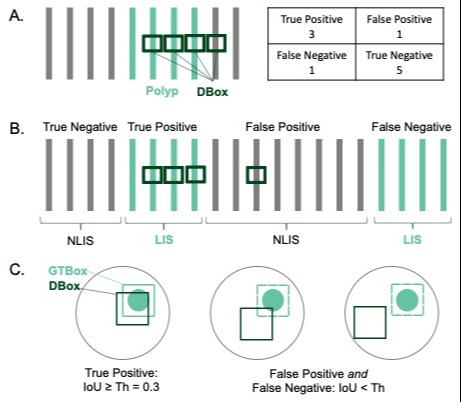

Results: Participants proposed 54 initial criteria. Round one had a 96% (n=24) response rate, with mean scores ranging from 2.25 to 4.38. Criteria spanned the clinical outcome (n=21), statistical (12), user interface (9), workflow integration (6), data transparency (5), and regulatory (1) domains. The second ranking round had a 100% response rate. The final six criteria were 1) sensitivity (mean score = 4.16), 2) separate and independent CADe algorithm validation (4.16), 3) adenoma detection rate (4.08), 4) false positive rate (4.00), 5) latency (3.84), and 6) adenoma miss rate (3.68). Sensitivity and false positive rate were defined at the per-frame or per-polyp level (graphically represented in Figure 1), and latency was defined as the delay time in milliseconds.
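One way to put the per-frame and per-polyp definitions into practice is sketched below. The abstract defers the exact definitions to Figure 1, so the sketch makes three assumptions. First, each video is split into lesion image sequences (LIS), each showing one polyp, and non-lesion image sequences (NLIS). Second, a polyp counts as detected if CADe flags any frame of its LIS. Third, the polyp-level false positive rate is the share of NLIS that contain at least one alarm. The `ImageSequence` type and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ImageSequence:
    """Hypothetical run of consecutive video frames used for illustration."""
    is_lesion: bool     # True for a lesion image sequence (LIS): every frame shows one polyp
    alarms: list[bool]  # per-frame CADe output: True if CADe flagged that frame


def per_frame_metrics(seqs: list[ImageSequence]) -> tuple[float, float]:
    """Frame-level sensitivity (flagged polyp frames / polyp frames) and
    false positive rate (flagged non-polyp frames / non-polyp frames)."""
    lesion_frames = [a for s in seqs if s.is_lesion for a in s.alarms]
    clean_frames = [a for s in seqs if not s.is_lesion for a in s.alarms]
    if not lesion_frames or not clean_frames:
        raise ValueError("need both polyp and non-polyp frames")
    return sum(lesion_frames) / len(lesion_frames), sum(clean_frames) / len(clean_frames)


def per_polyp_metrics(seqs: list[ImageSequence]) -> tuple[float, float]:
    """Polyp-level sensitivity (LIS with at least one alarm / all LIS) and
    false positive rate (NLIS with at least one alarm / all NLIS)."""
    lis = [s for s in seqs if s.is_lesion]
    nlis = [s for s in seqs if not s.is_lesion]
    if not lis or not nlis:
        raise ValueError("need both LIS and NLIS")
    detected = sum(any(s.alarms) for s in lis)
    false_alarms = sum(any(s.alarms) for s in nlis)
    return detected / len(lis), false_alarms / len(nlis)


video = [
    ImageSequence(True, [False, True, True, True]),     # polyp A: flagged on 3 of 4 frames
    ImageSequence(True, [False, False]),                # polyp B: never flagged (missed)
    ImageSequence(False, [False, False, True, False]),  # one false alarm frame
    ImageSequence(False, [False, False]),               # no alarms
]
print(per_frame_metrics(video))  # (0.5, 0.16666666666666666)
print(per_polyp_metrics(video))  # (0.5, 0.5)
```

In this toy video the same alarms give a false positive rate of 1/6 per frame but 1/2 per sequence. This gap shows why the level of definition has to be fixed before two CADe systems are compared.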

Discussion: We present the first international consensus statement on scoring metrics for CADe in colonoscopy. Clinical outcome and statistical criteria were ranked as highest priority, highlighting CADe's ideal role of flagging lesions while avoiding alarm fatigue. The high ranking of CADe algorithm validation reflects the importance of data transparency when using AI-based tools, although concerns were raised about its usefulness as an item in a scoring instrument. Future research should test these criteria on a benchmark video data set to develop a validated scoring instrument for assessing CADe performance. These scoring criteria should inform CADe algorithm development, institutional uptake, society recommendations, and FDA review.

Note: The table for this abstract can be viewed in the ePoster Gallery section of the ACG 2024 ePoster Site or in The American Journal of Gastroenterology's abstract supplement issue, both of which will be available starting October 27, 2024.


Sanjay Gadi, MD1, Jeremy Glissen Brown, MD, MSc1, Tyler M. Berzin, MD2. P1939 - Standardizing Criteria for the Comparison of AI-Based Computer-Aided Detection Programs in Colonoscopy: A Modified Delphi Approach, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.


Figure 1: Graphic representation of A) per-frame, B) per-polyp, and C) per-detection box definitions for statistical criteria. Green bars represent polyp-containing frames. DBox, detection box; GTBox, ground truth box; NLIS, non-lesion image sequence; LIS, lesion image sequence; IoU, intersection over union; Th, threshold.
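The per-detection box definition in panel C compares a CADe detection box (DBox) with a ground truth box (GTBox) by their intersection over union (IoU), checked against a threshold Th. The minimal sketch below assumes axis-aligned boxes and a default Th of 0.5, a common object-detection choice; the abstract gives no value. It also assumes greedy one-to-one matching, which is a standard convention and not a method confirmed by the authors. `Box` and `match_boxes` are hypothetical names.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates, with x1 < x2 and y1 < y2."""
    x1: float
    y1: float
    x2: float
    y2: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes (0.0 when they do not overlap)."""
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def match_boxes(dboxes: list[Box], gtboxes: list[Box], th: float = 0.5) -> tuple[int, int, int]:
    """Greedily pair DBoxes with GTBoxes in descending IoU order; a pair counts only
    if IoU >= th and neither box is already paired. Returns (TP, FP, FN)."""
    pairs = sorted(
        ((iou(d, g), i, j) for i, d in enumerate(dboxes) for j, g in enumerate(gtboxes)),
        reverse=True,
    )
    used_d: set[int] = set()
    used_g: set[int] = set()
    for score, i, j in pairs:
        if score < th:
            break  # remaining pairs have even lower IoU
        if i in used_d or j in used_g:
            continue
        used_d.add(i)
        used_g.add(j)
    tp = len(used_d)
    return tp, len(dboxes) - tp, len(gtboxes) - tp


gt = [Box(0, 0, 10, 10)]
detections = [Box(5, 0, 15, 10), Box(1, 1, 10, 10)]
print(round(iou(detections[0], gt[0]), 3))  # 0.333
print(match_boxes(detections, gt))           # (1, 1, 0)
```

Here the tighter detection (IoU 0.81) claims the single ground truth box. The looser one (IoU 0.333) then counts as a false positive, because each GTBox can be matched only once.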


Disclosures:

Sanjay Gadi indicated no relevant financial relationships.

Jeremy Glissen Brown: Medtronic – Consultant. Odin Vision – Advisory Committee/Board Member. Olympus – Consultant.

Tyler Berzin: Boston Scientific – Consultant. Magentiq Eye – Consultant. Medtronic – Consultant. RSIP Vision – Consultant. Wision AI – Consultant.
