Sunday Poster Session

Category: Obesity

P1452 - Analysis of Provider Preferences for AI-Generated Responses to Anti-Obesity Treatments

Sunday, October 27, 2024

3:30 PM - 7:00 PM ET

Location: Exhibit Hall E

Thomas W. Fredrick, MD

Mayo Clinic

Rochester, MN

Presenting Author(s)

Thomas W. Fredrick, MD, Manik Aggarwal, MBBS, Andres Acosta, MD, PhD

Mayo Clinic, Rochester, MN

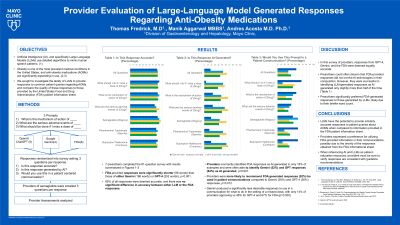

Introduction: Use of anti-obesity medications (AOMs) is expanding rapidly given the high prevalence of obesity. Artificial intelligence (AI) large language models (LLMs) generate human-like text and have the potential to help providers answer patient questions about their medications. We compared the quality of LLM-generated responses to patient queries about AOMs with the corresponding content of the United States Food and Drug Administration (FDA) patient information sheets.

Methods: In April 2024, we prompted two LLMs, Gemini (Google) and GPT-4 (OpenAI), with patient queries about three AOMs: semaglutide (Wegovy), phentermine/topiramate (Qsymia), and bupropion/naltrexone (Contrave). Questions addressed the mechanism of action, serious adverse effects, and management of a missed dose. A survey presenting the responses from both LLMs and from the FDA patient information sheets in randomized order was sent to providers at a single institution who had a history of prescribing semaglutide. For each response, providers indicated whether it was AI-generated, whether it was accurate, and whether it was acceptable for use in patient communication. Survey responses were analyzed with ANOVA in BlueSky Statistics v10.3.
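The abstract reports using ANOVA but does not specify the model. As a rough illustration only, the sketch below computes a one-way ANOVA F statistic across response sources in plain Python. The function name `one_way_anova_f` and the 0/1 ratings in the example are hypothetical and are not study data; in practice a statistics package (for example `scipy.stats.f_oneway`, which also returns a p-value) would normally be used.

```python
"""Minimal one-way ANOVA F statistic (illustrative sketch, not the study's analysis)."""

from statistics import mean


def one_way_anova_f(groups: dict[str, list[float]]) -> float:
    """Return the one-way ANOVA F statistic for two or more groups.

    F = (SS_between / (k - 1)) / (SS_within / (N - k)), where k is the
    number of groups and N is the total number of observations.
    """
    samples = [list(values) for values in groups.values()]
    k = len(samples)
    n_total = sum(len(s) for s in samples)
    if k < 2 or n_total <= k or any(len(s) == 0 for s in samples):
        raise ValueError("need >= 2 non-empty groups and more observations than groups")

    grand_mean = mean(x for s in samples for x in s)
    # Between-group sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in samples)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(s)) ** 2 for s in samples for x in s)
    if ss_within == 0:
        raise ValueError("zero within-group variance; F is undefined")

    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Hypothetical 0/1 "acceptable for patient communication" ratings (invented, not study data).
example = {"FDA": [1, 1, 0, 1], "Gemini": [0, 1, 0, 0], "GPT-4": [0, 0, 1, 0]}
print(round(one_way_anova_f(example), 3))  # 1.333
```

With these invented ratings the group means are 0.75, 0.25, and 0.25, giving F = 4/3; a p-value would additionally require the F distribution with (k - 1, N - k) degrees of freedom.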

Results: Seven prescribers completed the 81-question survey; results are summarized in Table 1. FDA-provided responses were significantly shorter (99 words) than those from Gemini (186 words) or GPT-4 (252 words) (p < 0.001). Overall, 85% of responses were rated accurate, with no significant difference between LLM and FDA responses. Providers incorrectly labeled FDA responses as AI-generated in 18% of cases and correctly identified Gemini (52%) and GPT-4 (64%) responses as AI-generated (p < 0.001). Providers were more likely to recommend FDA responses for use in patient communication (52%) than Gemini (30%) or GPT-4 (30%) responses (p = 0.012). Subgroup analyses by question type and by medication showed no significant differences in provider preferences.
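The 81-question figure is consistent with a fully crossed design, under the assumption (not stated in the abstract) that each source, medication, and question-type combination appeared once and each response was rated on the same three items. The short sketch below checks that count and expresses the reported word counts relative to the FDA responses; the variable names are illustrative.

```python
"""Back-of-envelope checks on the survey design and response lengths.

Assumption: every source x medication x question-type combination appears
once, and each response is rated on the same three items.
"""

sources = ["Gemini", "GPT-4", "FDA"]
medications = ["semaglutide", "phentermine/topiramate", "bupropion/naltrexone"]
question_types = ["mechanism of action", "serious adverse effects", "missed dose"]
rating_items = ["AI-generated?", "accurate?", "acceptable for patient communication?"]

n_responses = len(sources) * len(medications) * len(question_types)  # 3 x 3 x 3 = 27
n_survey_items = n_responses * len(rating_items)  # 27 x 3 = 81

# Reported response lengths in words, taken from the Results paragraph.
word_counts = {"FDA": 99, "Gemini": 186, "GPT-4": 252}
# Length of each source's responses relative to the FDA responses.
ratios = {src: round(words / word_counts["FDA"], 2) for src, words in word_counts.items()}

print(n_responses, n_survey_items)  # 27 81
print(ratios)  # {'FDA': 1.0, 'Gemini': 1.88, 'GPT-4': 2.55}
```

On these figures, Gemini responses were roughly 1.9 times and GPT-4 responses roughly 2.5 times as long as the FDA text.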

Discussion: Providers evaluating AI-generated and FDA responses to AOM queries rated both sources as similarly accurate, and they could often distinguish FDA-provided responses from AI-generated content. Providers preferred FDA responses for patient communication, possibly because of their shorter length. Clinicians who want to use LLMs in practice may integrate them more effectively by prompting the models for brief responses.
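The brevity recommendation can be sketched as a small prompt wrapper plus a length check. The helper names, the prompt wording, and the 100-word default (chosen only because it is close to the 99-word FDA responses) are hypothetical; no particular LLM API is assumed, and these are not the prompts used in the study.

```python
"""Sketch: requesting brief LLM answers and checking their length.

Hypothetical helpers; the prompt wording and 100-word default are
illustrative assumptions, not the study's prompts.
"""


def brief_prompt(patient_question: str, max_words: int = 100) -> str:
    """Wrap a patient question with an explicit word limit."""
    return (
        "Answer the following patient question in plain language, "
        f"using no more than {max_words} words.\n\n"
        f"Question: {patient_question}"
    )


def word_count(text: str) -> int:
    """Count whitespace-separated words, a rough proxy for response length."""
    return len(text.split())


def within_limit(response: str, max_words: int = 100) -> bool:
    """Return True if a model response respects the requested word limit."""
    return word_count(response) <= max_words


print(brief_prompt("What should I do if I miss a dose of Wegovy?"))
```

Because a model may not always honor a requested word limit, checking the returned text with a function like `within_limit` after generation may still be useful.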

Note: The table for this abstract can be viewed in the ePoster Gallery section of the ACG 2024 ePoster Site or in The American Journal of Gastroenterology's abstract supplement issue, both of which will be available starting October 27, 2024.

Disclosures:

Thomas Fredrick indicated no relevant financial relationships.

Manik Aggarwal indicated no relevant financial relationships.

Andres Acosta: Amgen – Consultant. Boehringer Ingelheim – Consultant, Grant/Research Support. Bausch Health – Consultant. Currax Pharmaceuticals – Consultant. General Mills – Consultant. Gila Therapeutics – Consultant. Nestle – Consultant. Phenomix Sciences Inc. – Intellectual Property/Patents. RareDiseases – Consultant. Rhythm Pharmaceuticals – Consultant, Grant/Research Support. Satiogen Pharmaceuticals – Grant/Research Support. Vivus Inc. – Grant/Research Support.

Thomas W. Fredrick, MD, Manik Aggarwal, MBBS, Andres Acosta, MD, PhD. P1452 - Analysis of Provider Preferences for AI-Generated Responses to Anti-Obesity Treatments, ACG 2024 Annual Scientific Meeting Abstracts. Philadelphia, PA: American College of Gastroenterology.